Создание динамической СХД по запросу пользователя

Для того чтобы пользовательская задача могла осуществлять ввод/вывод данных в специально созданную динамическую СХД необходимо перед запуском задачи оформить запрос на создание динамической СХД и настроить параметры обмена данными между создаваемой СХД и внешним окружением. Для этих целей необходимо добавить дополнительные поля в скрипт sbatch, применяемый для работы с планировщиком задач slurm (см. ниже п. 2-4):

#!/bin/bash

#### 1. SCHEDULER INITIATION

#

# request for 4 nodes

#SBATCH -N 4

# request for 1 day and 3 hours of run time

#SBATCH -t 1-03:00:00

#### 2. STORAGE INITIATION

# Capacity of required storage and its type

#

# type=scratch,cached

# type=scratch means that data movement will be explicitly

# requested using DataWarp job script - only supported

# type=cached means that all movement

# of data between the instance and the PFS is done

# implicitly. The instance configuration and optional API

# can change the implicit behavior.

#

# access_mode=striped,private,ldbalanced

# access_mode=striped means that individual files are

# striped across multiple DataWarp server nodes

# (aggregating both capacity and bandwidth per file) and

# are accessible by all compute nodes using the instance

#

# access_mode=private means that individual files reside

# on one DataWarp server node (aggregating both

# capacity and bandwidth per instance). For scratch

# instances the files are only accessible to the compute

# node that created them (e.g. like /tmp); for cached

# instances the files are accessible to all compute nodes

# (via the underlying PFS namespace) but there is no

# coherency between compute nodes or between compute

# nodes and the PFS. Private access is not supported for

# persistent instances because a persistent instance can be

# used by multiple jobs with different numbers of

# compute nodes.

#

# access_mode=ldbalanced means that individual

# files are replicated (read only) on multiple DataWarp

# server nodes (aggregating bandwidth but not capacity

# per instance) and compute nodes choose one of the

# replicas to use. Loadbalanced mode is useful when the

# files are not large enough to stripe across a sufficient

# number of nodes.

#

# pool=<poolname>

# pool means diskpool with same granularity (all disks in pool is the same size)

#DW jobdw type=scratch capacity=1GB access_mode=striped pool=gran2

#### 3. STORAGE STAGEIN

# Input data path which should be copied from cold tier to the new storage-on-demand (e.g. config file for MPI application)

# type can be 'file' or 'directory'

# The location on burst buffer system to store I/O data is usually set to the value of variable

# DW_JOB_PRIVATE or DW_JOB_STRIPED, depending on the access_mode set in the batch script.

#DW stage_in type=file source=/home/rsc/data.in destination=$DW_JOB_STRIPED/data.in

#### 4. ENVIRONMENT INITIATION

# unload any previously loaded modules

module purge > /dev/null 2>&1

# Load environment modules suitable for the job

module load mpi/intel

#### 5. APPLICATION RUN

# run MPI rank on nodes

srun --ntasks-per-node=1 ./my_app -i $DW_JOB_STRIPED/data.in -o $DW_JOB_STRIPED/data.out

#### 6. STORAGE STAGEOUT

# Output data path which should be copied from the new storage-on-demand back to cold tier (e.g. result of MPI program)

#DW stage_out type=file source=$DW_JOB_STRIPED/data.out destination=/home/rsc/data.out

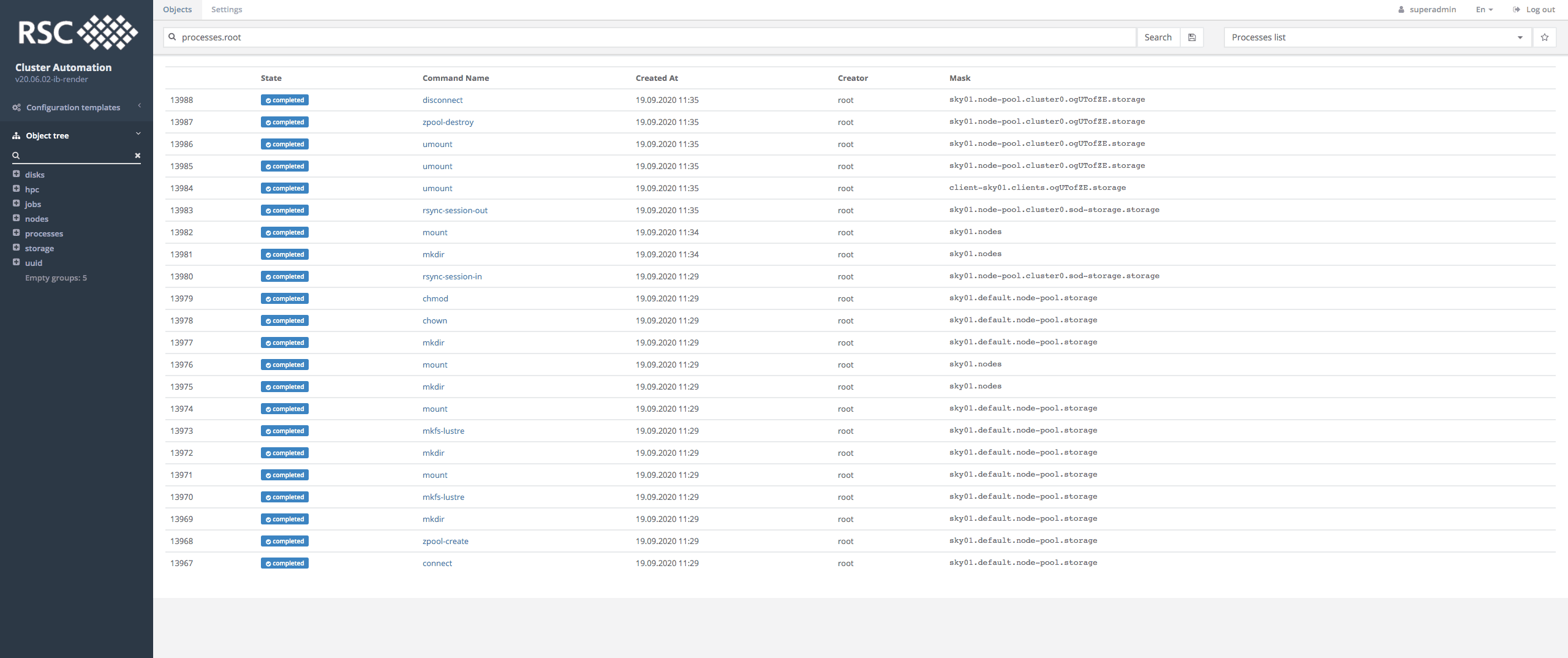

Процессы

Демонстрация работы с динамической СХД Lustre

Ниже приводится пример запуска задачи, использующей динамически созданную СХД Lustre:

# JOB BODY

root@head1.hpc3:/home/rsc$ cat job.sh

#!/bin/bash

#DW jobdw capacity=2TB type=scratch access_mode=striped pool=gran2

#DW stage_in type=file source=/home/rsc/data.in destination=$DW_JOB_STRIPED/data.in

srun realpath $DW_JOB_STRIPED/data.in

srun ls $DW_JOB_STRIPED/data.in

srun md5sum $DW_JOB_STRIPED/data.in > $DW_JOB_STRIPED/data.out

srun realpath $DW_JOB_STRIPED/data.out

#DW stage_out type=file source=$DW_JOB_STRIPED/data.out destination=/home/rsc/data.out

# INPUT DATA

root@head1.hpc3:/home/rsc$ cat data.in

hello

# JOB LAUNCH

root@head1.hpc3:/home/rsc$ sbatch -N1 -t 10 -w sky01 job.sh

Submitted batch job 5288

# JOB OUTPUT

root@head1.hpc3:/home/rsc$ cat slurm-5288.out

/tmp/mnt/sod/5288_job/global/data.in

/tmp/mnt/sod/5288_job/global/data.in

/tmp/mnt/sod/5288_job/global/data.out

# CHECK JOB CONSISTENT

root@head1.hpc3:/home/rsc$ md5sum data.in

b1946ac92492d2347c6235b4d2611184 data.in

# JOB OUTPUT DATA from Storage-on-Demand

root@head1.hpc3:/home/rsc$ cat data.out

b1946ac92492d2347c6235b4d2611184 /tmp/mnt/sod/5288_job/global/data.in

Пример sbatch скрипта

#!/bin/bash

#DW jobdw capacity=1GB type=scratch access_mode=striped pool=lustrepool

#DW stage_in type=file source=/home/danil_e71/data.in destination=$DW_JOB_STRIPED/data.in

srun echo $DW_JOB_STRIPED

srun realpath $DW_JOB_STRIPED/data.in

srun ls $DW_JOB_STRIPED/data.in

srun md5sum $DW_JOB_STRIPED/data.in > $DW_JOB_STRIPED/data.out

srun realpath $DW_JOB_STRIPED/data.out

srun echo "Go out"

#DW stage_out type=file source=$DW_JOB_STRIPED/data.out destination=/home/danil_e71/data.out